The line between AI agents and chatbots blurs more each month. As of 2026, both are built on the same large language models, but their capabilities differ sharply. Understanding these differences is essential for choosing the right monetization strategy for your AI application.

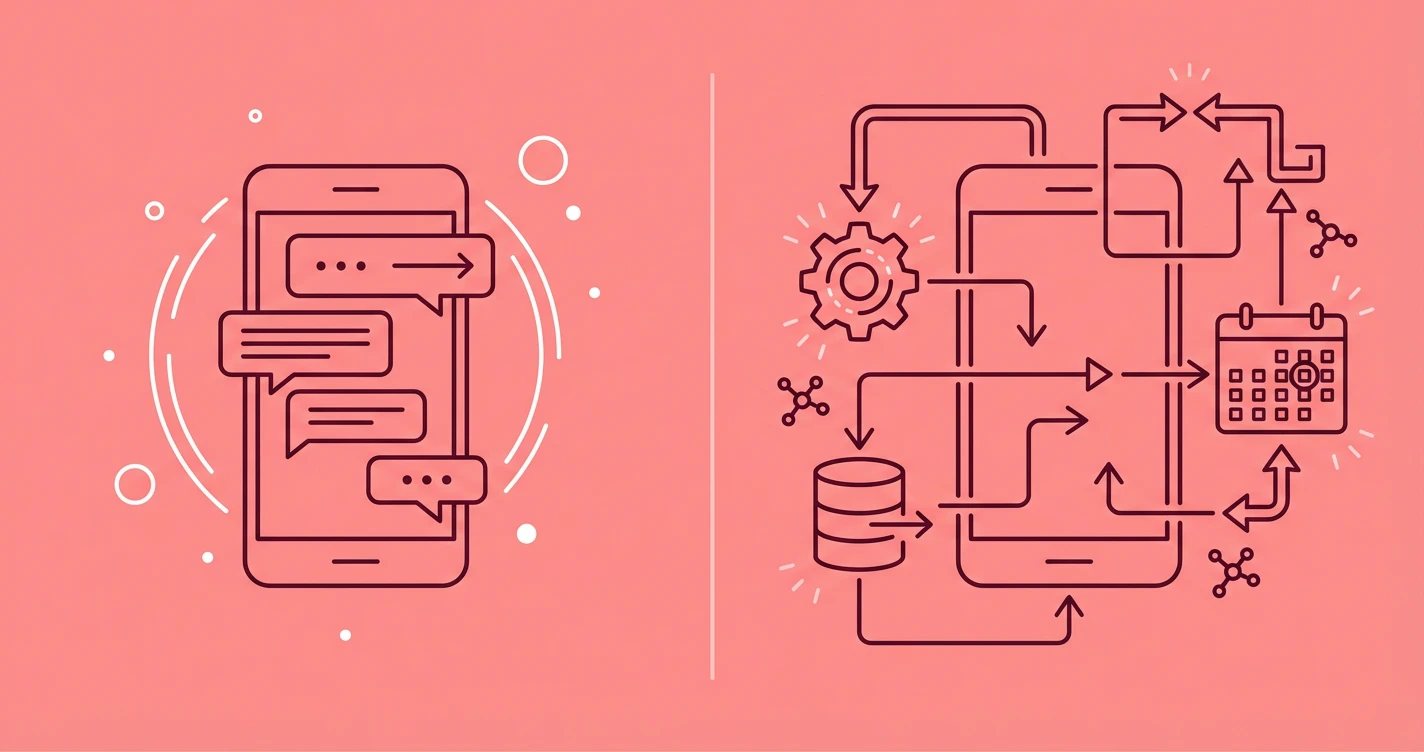

Chatbots talk to you. Agents do work for you.

Chatbots are reactive systems that respond to user input. AI agents are autonomous systems that perceive their environment, make decisions, and execute multi-step workflows without constant human guidance.

What makes a chatbot a chatbot?

Chatbots are conversational interfaces. They wait for user input, process it, and generate a response. These are reactive question-answering systems.

Most chatbots rely on one of two approaches: pattern matching with decision trees or LLM-powered natural language understanding. The second approach has become dominant in 2026, but the core behavior remains the same.

They respond. They don’t initiate.

| Capability | How It Works |
|---|---|
| Input Processing | Receives user query, returns response |
| Memory | Session-based context or short-term history |
| Decision Making | Pre-defined flows or prompt-based routing |
| Tool Use | Limited or none, typically API calls triggered by keywords |
| Autonomy | Zero - requires user input to act |

Chatbots excel at customer service, FAQ automation, and guided experiences because they’re predictable, easy to scope, and don’t surprise users with unexpected behavior.

Traditional chatbots handle 60-80% of common support queries. Advanced LLM-powered chatbots can respond to open-ended questions but still can't complete tasks autonomously.

Chatbots display information but cannot execute workflows. You can ask a chatbot how to reset your password, but it can’t log into your account and do it for you.

What defines an AI agent?

AI agents perceive their environment, plan multi-step workflows, and execute them on their own. They work toward goals rather than just answering questions, which requires a fundamentally different architecture.

An agent follows the ReAct loop: Reason about the task, take an Action, observe the result, and repeat until the goal is met. This lets agents break complex objectives into subtasks and complete multi-step workflows.
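A minimal sketch of the ReAct loop, using the meeting-scheduling task as the example. The `reason` function is a hypothetical, scripted stand-in for the LLM call, and `lookup_free_slot` and `send_invite` are hypothetical tools; a real agent would prompt a model and call live APIs. The shape of the loop (reason, act, observe, repeat until the model chooses to finish) is the part that carries over.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    thought: str   # the model's reasoning for this step
    action: str    # tool name, or "finish" to stop
    argument: str


# Hypothetical tools; a real agent would call calendar or email APIs here.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_free_slot": lambda team: "Tuesday 10:00",
    "send_invite": lambda slot: f"invite sent for {slot}",
}


def reason(goal: str, history: list[tuple[Step, str]]) -> Step:
    """Scripted stand-in for an LLM call that picks the next step from past observations."""
    if not history:
        return Step("I need a free slot first.", "lookup_free_slot", "team")
    last_step, last_observation = history[-1]
    if last_step.action == "lookup_free_slot":
        return Step(f"Slot found: {last_observation}.", "send_invite", last_observation)
    return Step("The invite is out, so the goal is met.", "finish", last_observation)


def react(goal: str, max_steps: int = 5) -> tuple[str, list[tuple[Step, str]]]:
    history: list[tuple[Step, str]] = []
    for _ in range(max_steps):
        step = reason(goal, history)                      # Reason
        if step.action == "finish":                       # the model decides when to stop
            return step.argument, history
        observation = TOOLS[step.action](step.argument)   # Act
        history.append((step, observation))               # Observe, then loop again
    raise RuntimeError("step budget exhausted before the goal was met")


result, trace = react("Schedule a meeting with my team next Tuesday")
print(result)  # invite sent for Tuesday 10:00
```

The `max_steps` budget is a deliberate design choice: a model that never emits `finish` would otherwise loop indefinitely.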

They initiate. They adapt. They persist until done.

| Capability | How It Works |
|---|---|
| Input Processing | Goal-based objectives, not just queries |
| Memory | Three-layer system: working, episodic, semantic |
| Decision Making | Plans multi-step workflows and adapts to feedback |
| Tool Use | Orchestrates APIs, databases, and external services |
| Autonomy | High - executes plans without constant supervision |

An agent can handle tasks like scheduling a meeting with your team next Tuesday by checking calendars, sending invites, and following up with reminders. A chatbot would just tell you how to do it yourself.

The three-layer memory system keeps three kinds of context apart: working memory holds the current task context, episodic memory stores past interactions, and semantic memory contains learned knowledge. Together, these let agents learn from experience.
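One way to picture the three layers is a small in-process store. This is a sketch under simple assumptions: working memory is a dict that lives for one task, episodic memory is a bounded `deque` of finished tasks, and semantic memory is a key-value map of durable facts. Production agents usually back these with a database or vector store; the `AgentMemory` class and its method names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Three memory layers; the storage choices here are illustrative assumptions."""
    working: dict = field(default_factory=dict)                         # current task only
    episodic: deque = field(default_factory=lambda: deque(maxlen=100))  # recent past tasks
    semantic: dict = field(default_factory=dict)                        # durable learned facts

    def start_task(self, goal: str) -> None:
        self.working = {"goal": goal}

    def finish_task(self, outcome: str) -> None:
        # Archive the finished task as an episode, then clear working memory.
        self.episodic.append({"goal": self.working.get("goal", ""), "outcome": outcome})
        self.working = {}

    def learn(self, key: str, fact: str) -> None:
        self.semantic[key] = fact

    def recall(self, keyword: str) -> list:
        return [episode for episode in self.episodic if keyword in episode["goal"]]


memory = AgentMemory()
memory.start_task("book team meeting for Tuesday")
memory.learn("team_timezone", "Europe/Berlin")
memory.finish_task("invite sent for Tuesday 10:00")
print(memory.recall("meeting"))
# [{'goal': 'book team meeting for Tuesday', 'outcome': 'invite sent for Tuesday 10:00'}]
```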

Advanced agents use self-reflection to critique their own outputs. If an action fails or produces unexpected results, they can revise their plan and try a different approach.
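The reflect-and-revise behavior can be sketched as a loop that tries a plan, critiques the result, and moves on when the action fails or the critique rejects the output. In a real agent the LLM would generate the revised plan; here a fixed list of fallback plans stands in for that, and `critique` is a hypothetical check in place of a model's self-review.

```python
from typing import Callable, Optional


def execute_with_reflection(
    plans: list[Callable[[], str]],
    critique: Callable[[str], Optional[str]],
) -> tuple[str, list[str]]:
    """Try plans in order; critique each result and revise when it falls short."""
    notes: list[str] = []
    for attempt, plan in enumerate(plans, start=1):
        try:
            output = plan()
        except Exception as exc:            # the action itself failed
            notes.append(f"attempt {attempt} failed: {exc}")
            continue
        problem = critique(output)          # self-reflection on the output
        if problem is None:
            return output, notes
        notes.append(f"attempt {attempt} rejected: {problem}")
    raise RuntimeError("all plans exhausted: " + "; ".join(notes))


# Hypothetical plans for booking a meeting.
def book_direct() -> str:
    raise ConnectionError("calendar API timeout")


def book_via_email() -> str:
    return "invite drafted without a time"


def book_with_time() -> str:
    return "invite sent for Tuesday 10:00"


def needs_time(output: str) -> Optional[str]:
    return None if "10:00" in output else "invite is missing a time"


result, notes = execute_with_reflection([book_direct, book_via_email, book_with_time], needs_time)
print(result)  # invite sent for Tuesday 10:00
print(notes)
```

The `notes` list doubles as a record of why each attempt was abandoned, which is useful context to feed back to the model on the next try.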

Agents integrate with multiple systems simultaneously. They don't just retrieve data from APIs; they coordinate actions across platforms to complete end-to-end workflows.

How do their architectures differ?

Chatbots and agents require fundamentally different architectures. A chatbot follows a single-turn or sequential conversation flow: input triggers processing, processing generates output, and the output returns to the user. State is maintained in session context or a conversation buffer.
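The chatbot flow fits in a few lines. This sketch assumes a `generate` callable standing in for an LLM completion call and keeps a bounded conversation buffer; the `Chatbot` class is hypothetical. Note that `reply` returns as soon as it has an answer: nothing runs until the next user message arrives.

```python
from collections import deque
from typing import Callable


class Chatbot:
    """Reactive request-response flow with a bounded conversation buffer (illustrative)."""

    def __init__(self, generate: Callable[[list], str], max_turns: int = 10) -> None:
        self.generate = generate                          # stand-in for an LLM completion call
        self.buffer: deque = deque(maxlen=max_turns * 2)  # (role, text) pairs; oldest fall off

    def reply(self, user_message: str) -> str:
        self.buffer.append(("user", user_message))  # input triggers processing
        answer = self.generate(list(self.buffer))   # processing generates output
        self.buffer.append(("assistant", answer))
        return answer                               # output returns to the user; nothing else runs


bot = Chatbot(lambda history: f"You said: {history[-1][1]}", max_turns=1)
bot.reply("hi")
print(bot.reply("bye"))  # You said: bye
print(list(bot.buffer))  # [('user', 'bye'), ('assistant', 'You said: bye')]
```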

An agent runs a continuous perception-action loop: the input sets a goal, a planner breaks it into subtasks, an executor runs actions, an observer checks the results, and the cycle repeats until the goal is met. State is distributed across memory layers and tool integrations.
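A sketch of the planner/executor/observer cycle, assuming `plan` and `execute` are hypothetical callables (in practice the planner is an LLM prompt and the executor calls real tools). When a subtask fails, the loop stops the current pass and asks the planner for a revised plan that accounts for the failure.

```python
from typing import Callable

Planner = Callable[[str, list], list]  # (goal, failed subtasks) -> ordered subtasks
Executor = Callable[[str], bool]       # runs one subtask, reports success


def run_agent(goal: str, plan: Planner, execute: Executor, max_cycles: int = 3) -> list:
    """Plan, execute, observe, and replan until every subtask succeeds (illustrative)."""
    completed: list = []
    failed: list = []
    for _ in range(max_cycles):
        # Planner: decompose the goal, skipping subtasks that already succeeded.
        subtasks = [task for task in plan(goal, failed) if task not in completed]
        failed = []
        for task in subtasks:
            if execute(task):            # executor runs the action
                completed.append(task)   # observer records success
            else:
                failed.append(task)      # observer records failure
                break                    # later subtasks may depend on this one, so replan
        if not failed:
            return completed
    raise RuntimeError(f"goal not met; still failing: {failed}")


def plan(goal: str, failed: list) -> list:
    invite_step = "send invites via email" if "send invites" in failed else "send invites"
    return ["check calendars", invite_step, "schedule reminders"]


def execute(task: str) -> bool:
    return task != "send invites"  # pretend the calendar-invite API is down


print(run_agent("Schedule a team meeting next Tuesday", plan, execute))
# ['check calendars', 'send invites via email', 'schedule reminders']
```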

★ = low · ★★ = medium · ★★★ = high

| Component | Chatbot | AI Agent |
|---|---|---|
| Planning System | None or simple routing | Multi-step task decomposition |
| Memory Complexity | ★ | ★★★ |
| Tool Orchestration | ★ | ★★★ |
| Error Handling | Return error message | Retry with revised plan |
| System Integration | ★ | ★★★ |

Chatbots are stateless or near-stateless because they don’t execute multi-step workflows. Agents require persistent state across actions, which means more infrastructure complexity.

Tool use is another dividing line. Chatbots might call one or two APIs to fetch data. Agents orchestrate entire toolchains, using the output of one tool as input to the next, adapting based on intermediate results.
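Tool chaining, sketched with the expense-report workflow: each tool's output becomes the next tool's input, and an intermediate result decides which tool runs last. The functions and the 500-unit approval threshold are hypothetical. In an agent the LLM would choose the next tool; a plain branch stands in for that decision here.

```python
from typing import Any, Callable


def extract(receipt: str) -> dict[str, Any]:
    vendor, amount = receipt.split(":")
    return {"vendor": vendor.strip(), "amount": float(amount)}


def validate(expense: dict[str, Any]) -> dict[str, Any]:
    expense["needs_approval"] = expense["amount"] > 500  # hypothetical policy threshold
    return expense


def submit(expense: dict[str, Any]) -> str:
    return f"submitted {expense['vendor']} ({expense['amount']:.2f})"


def request_approval(expense: dict[str, Any]) -> str:
    return f"approval requested for {expense['vendor']} ({expense['amount']:.2f})"


def process_receipt(receipt: str) -> str:
    expense = validate(extract(receipt))  # each tool's output feeds the next
    # Adapt to the intermediate result instead of following a fixed script.
    next_tool: Callable[[dict[str, Any]], str] = (
        request_approval if expense["needs_approval"] else submit
    )
    return next_tool(expense)


print(process_receipt("Hotel: 820"))  # approval requested for Hotel (820.00)
print(process_receipt("Taxi: 42.5"))  # submitted Taxi (42.50)
```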

A working agent typically needs:

- Goal decomposition system to break objectives into subtasks

- Action executor with retry logic and error recovery

- Memory store for working, episodic, and semantic context

- Tool registry with capability descriptions and usage patterns

- Reflection module to critique and improve outputs

Building an agent requires infrastructure for planning, execution monitoring, state persistence, and failure recovery.
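Of these components, the tool registry is the easiest to show concretely. This sketch pairs each tool with a plain-language description and a JSON Schema for its arguments (the kind of parameter description function-calling APIs accept), serializes those descriptions for the model prompt, and dispatches calls by name. `Tool`, `ToolRegistry`, and `check_calendar` are hypothetical names, and the exact description format each LLM vendor expects differs.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str             # what the model reads when choosing a tool
    parameters: dict[str, Any]   # JSON Schema for the arguments
    fn: Callable[..., Any]


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def describe(self) -> str:
        """Serialize capability descriptions for inclusion in the model prompt."""
        return json.dumps(
            [{"name": t.name, "description": t.description, "parameters": t.parameters}
             for t in self._tools.values()],
            indent=2,
        )

    def call(self, name: str, arguments: dict[str, Any]) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name].fn(**arguments)


registry = ToolRegistry()
registry.register(Tool(
    name="check_calendar",
    description="List free slots next Tuesday for one team member.",
    parameters={"type": "object",
                "properties": {"person": {"type": "string"}},
                "required": ["person"]},
    fn=lambda person: {"alice": ["Tue 10:00", "Tue 14:00"], "bob": ["Tue 10:00"]}.get(person, []),
))
print(registry.call("check_calendar", {"person": "bob"}))  # ['Tue 10:00']
```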

When should you use each?

The decision comes down to one question: Does the user need information or does the user need work done?

Use chatbots when:

- Users ask questions with clear answers

- The interaction is conversational and bounded

- You want predictable, scripted behavior

- The task is displaying or explaining information

- Compliance requires human oversight at each step

Use agents when:

- Users delegate multi-step tasks

- The workflow spans multiple systems or tools

- You want the system to adapt to changing conditions

- The task requires planning and decision-making

- Users expect fire-and-forget automation

| Scenario | Best Fit | Why |
|---|---|---|
| Customer support Q&A | Chatbot | Reactive, information-focused |
| Scheduling meetings | Agent | Multi-step workflow across calendars |
| Product recommendations | Chatbot | Display info based on preferences |
| Data pipeline orchestration | Agent | Autonomous execution and monitoring |
| FAQ automation | Chatbot | Pre-defined answers, no external actions |
| Expense report processing | Agent | Extract, validate, submit across systems |

Chatbots are easier to build, cheaper to run, and simpler to debug.

Agents handle complex, repetitive tasks that require coordination across multiple systems. They also introduce more failure modes because they take actions in external systems without confirmation at each step.

Agents require stronger guardrails and audit trails. If an agent books a flight or processes a refund, you need logs of every decision and action it took. Chatbots just need conversation transcripts.
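A minimal audit trail records, for every step, why the agent acted, which tool it called, the exact arguments, and what came back. This sketch keeps entries in memory and emits JSON Lines; a production system would write to durable, access-controlled storage. `AuditLog`, `issue_refund`, and `send_email` are hypothetical names.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class AuditEntry:
    step: int
    decision: str     # the agent's stated reason for acting
    action: str       # which tool it called
    arguments: dict   # exactly what it sent
    result: str       # what came back
    timestamp: float = field(default_factory=time.time)


class AuditLog:
    """Append-only, in-memory record of every decision and action (illustrative only)."""

    def __init__(self) -> None:
        self._entries: list = []

    def record(self, decision: str, action: str, arguments: dict, result: str) -> None:
        step = len(self._entries) + 1
        self._entries.append(AuditEntry(step, decision, action, arguments, result))

    def to_jsonl(self) -> str:
        # One JSON object per line, a convenient shape for shipping to log storage.
        return "\n".join(json.dumps(asdict(entry)) for entry in self._entries)


log = AuditLog()
log.record("Order is inside the refund window", "issue_refund",
           {"order_id": "A-1001", "amount": 49.99}, "refund approved")
log.record("Customer should be notified", "send_email",
           {"to": "customer@example.com", "template": "refund_confirmation"}, "queued")
print(log.to_jsonl())
```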

Many applications use both - a chatbot handles initial user interaction, then hands off to an agent when a task requires execution. This hybrid approach balances user control with automation.
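The hybrid handoff reduces to one routing decision: information request or work request. In this sketch, `needs_execution` is a crude keyword check standing in for a real intent classifier, and `answer`, `run_agent`, and `confirm` are hypothetical callables for the chatbot, the agent, and a user confirmation prompt. The confirmation step is one way to keep the user in control before the agent acts.

```python
from typing import Callable

ACTION_VERBS = ("book", "schedule", "submit", "cancel", "refund")  # hypothetical intent cues


def needs_execution(message: str) -> bool:
    """Crude stand-in for an intent classifier: does the user want work done?"""
    words = message.lower().split()
    return any(verb in words for verb in ACTION_VERBS)


def handle(message: str,
           answer: Callable[[str], str],
           run_agent: Callable[[str], str],
           confirm: Callable[[str], bool]) -> str:
    if not needs_execution(message):
        return answer(message)             # chatbot path: information only
    if not confirm(message):               # user stays in control before anything runs
        return "Okay, I won't do anything."
    result = run_agent(message)            # agent path: execute the workflow
    return f"Done: {result}"               # chatbot surface presents the result


info_reply = handle("How do refunds work?",
                    answer=lambda m: "Here is our refund policy.",
                    run_agent=lambda m: "refund issued",
                    confirm=lambda m: True)
task_reply = handle("Please refund order A-1001",
                    answer=lambda m: "Here is our refund policy.",
                    run_agent=lambda m: "refund issued",
                    confirm=lambda m: True)
print(info_reply)  # Here is our refund policy.
print(task_reply)  # Done: refund issued
```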

What does the market say?

The market is betting heavily on agents. Enterprise adoption of agentic AI reached 85% by the end of 2025, driven by productivity gains and cost reduction in repetitive workflows.

The AI agent market is growing at 45.8% annually, nearly double the 23% growth rate of traditional chatbots. This reflects a shift from conversational interfaces to task automation.

Salesforce’s Agentforce, IBM’s watsonx Orchestrate, and OpenAI’s Assistants API are all agent-focused products. Chatbot platforms are pivoting to include agentic capabilities or risk obsolescence.

The agent market is projected to exceed $10 billion in 2026 while chatbot growth slows. The inflection point came when LLMs became cheap and reliable enough for autonomous task execution.

If you build chatbot architecture when users expect agent behavior, you’ll face technical debt and architecture rewrites.

Building agent infrastructure for a chatbot use case wastes engineering time and introduces unnecessary complexity.

How do LLMs fit into this?

Large language models power both chatbots and agents, but the way they’re used differs completely.

In chatbots: LLMs generate responses to user input. The model receives a prompt, produces text, and the conversation continues. This is the standard completion API pattern.

In agents: LLMs serve as reasoning engines. They don’t just generate responses; they plan actions, decide which tools to call, interpret results, and adjust strategy. The model is embedded in a control loop.
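The agent side of this contrast is mostly about input and output shape: the prompt carries the goal, current state, and tool descriptions, and the model's reply must be a structured action the control loop can validate before executing. This is a sketch under assumptions: `build_agent_prompt` and `parse_action` are hypothetical helpers, the prompt wording is illustrative, and the model reply is hard-coded. Function-calling APIs return structured tool calls natively; parsing raw JSON here keeps the sketch vendor-neutral.

```python
import json


def build_agent_prompt(goal: str, state: dict, tools: list) -> str:
    """Goal + state + tool descriptions (the wording is an illustrative assumption)."""
    return (
        f"Goal: {goal}\n"
        f"State: {json.dumps(state)}\n"
        f"Tools: {json.dumps(tools)}\n"
        'Reply with JSON: {"tool": <name>, "arguments": {...}} or {"tool": "finish"}'
    )


def parse_action(model_output: str, tool_names: set) -> tuple:
    """Turn the model's reply into an executable action, rejecting unknown tools."""
    action = json.loads(model_output)
    tool = action.get("tool")
    if tool != "finish" and tool not in tool_names:
        raise ValueError(f"model chose an unknown tool: {tool!r}")
    return tool, action.get("arguments", {})


tools = [{"name": "check_calendar", "description": "List free slots for a person."}]
prompt = build_agent_prompt("Book a team meeting", {"done": []}, tools)
reply = '{"tool": "check_calendar", "arguments": {"person": "bob"}}'  # stand-in for the model
print(parse_action(reply, {t["name"] for t in tools}))  # ('check_calendar', {'person': 'bob'})
```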

| Pattern | Chatbot | Agent |
|---|---|---|
| Prompt Structure | User message + context | Goal + state + tool descriptions |
| Response Format | Natural language text | Structured actions or function calls |
| Iterations | One per user turn | Multiple per task until completion |
| Role of LLM | Text generator | Reasoning and planning engine |

Advanced LLM chatbots remain reactive. ChatGPT can answer complex questions and write code, but it won’t autonomously execute a deployment pipeline unless you explicitly design an agent around it.

Function calling in GPT-4 and Claude doesn’t make something an agent - orchestration determines whether it’s an agent or a chatbot. Chatbots use functions to retrieve data; agents use them to execute workflows.

If the LLM decides when to stop, it's part of an agent. If the user decides when to stop, it's a chatbot.

Vendors call everything an agent now, which muddies the terminology. A chatbot with function calling isn’t an agent unless it autonomously iterates toward a goal without user confirmation at each step.

Can both monetize conversations?

Both chatbots and agents can generate revenue through affiliate links and product recommendations. The approach differs slightly based on autonomy.

Chatbots: Display affiliate links as part of conversational responses. A user asks for headphone recommendations, the chatbot suggests products with embedded affiliate links. Revenue comes from click-throughs and purchases.

Agents: Embed affiliate links into the results of autonomous workflows, such as when an agent researches and compiles a report on the best CRM tools with affiliate links in the final output. Revenue comes from delegated research tasks.

Context-aware monetization works for both chatbots and agents. ChatAds provides a single API call that returns relevant affiliate offers based on conversation history, whether you’re building a reactive chatbot or an autonomous agent.

Chatbots insert affiliate links as users ask questions, while agents insert them as part of completed task outputs.

For chatbots, call the monetization API after each user message. For agents, call it when generating final deliverables or task summaries. Both approaches work, but timing affects user experience.
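The timing difference is easy to see in code. In this sketch, `fetch_offers` is a hypothetical stand-in for a monetization call that takes conversation context and returns offers; it is not the ChatAds API, whose actual request and response format should be taken from its own documentation. The chatbot fetches offers on every turn, while the agent fetches once, only for its final deliverable.

```python
from typing import Callable

FetchOffers = Callable[[list], list]  # hypothetical: conversation context -> list of offers


def with_links(text: str, offers: list) -> str:
    return text + "".join(f"\n- {offer['title']}: {offer['url']}" for offer in offers)


def chatbot_turn(history: list, user_message: str,
                 generate: Callable[[list], str], fetch_offers: FetchOffers) -> str:
    history.append(user_message)
    answer = generate(history)
    history.append(answer)
    return with_links(answer, fetch_offers(history))  # chatbot: one lookup per user message


def agent_deliverable(task_log: list, summary: str, fetch_offers: FetchOffers) -> str:
    # Agent: intermediate steps stay clean; offers are fetched once for the final output.
    return with_links(summary, fetch_offers(task_log + [summary]))


calls: list = []


def fake_fetch(context: list) -> list:
    calls.append(len(context))  # record how much context each lookup saw
    return [{"title": "Example CRM", "url": "https://example.com/crm?ref=demo"}]


history: list = []
chatbot_turn(history, "Which CRM is best for a small team?", lambda h: "Consider Example CRM.", fake_fetch)
chatbot_turn(history, "What does it cost?", lambda h: "Pricing varies by plan.", fake_fetch)
report = agent_deliverable(["searched CRMs", "compared pricing"], "Top pick: Example CRM", fake_fetch)
print(len(calls))  # 3: two chatbot turns plus one agent deliverable
print(report)
```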

Agents may monetize better because users delegate research tasks that imply stronger purchase intent, and higher intent typically means higher conversion rates. See our ranking of AI agent monetization platforms for a detailed comparison, or learn more about measuring revenue per message to track monetization performance.

Frequently Asked Questions

What is the main difference between an AI agent and an AI chatbot?

Chatbots are reactive systems that respond to user input, while AI agents are autonomous systems that perceive, plan, and execute multi-step workflows to achieve goals without constant human guidance.

Can a chatbot become an AI agent?

Not without architectural changes. Converting a chatbot to an agent requires adding planning systems, multi-layer memory, tool orchestration, and autonomous execution loops. It's typically easier to build an agent from scratch.

Do AI agents use chatbots?

Some systems use chatbots as the user-facing interface while agents handle backend task execution. The chatbot collects user intent, the agent executes the workflow, and the chatbot presents results. This hybrid approach is common in enterprise applications.

Are AI agents more expensive to build than chatbots?

Yes. Agents require infrastructure for planning, state persistence, tool orchestration, and error recovery. They also consume more LLM tokens per task due to iterative reasoning loops. Operational costs can run 3-5x higher than chatbots for similar use cases.

Can chatbots and AI agents both monetize conversations?

Yes. Both can embed affiliate links in responses or outputs. Chatbots monetize per-message interactions, while agents monetize completed task outputs. Agents may see higher conversion rates due to stronger purchase intent in delegated research tasks.

What is the ReAct loop in AI agents?

ReAct is short for Reasoning and Acting. Agents reason about the task, take an action using tools or APIs, observe the result, and repeat the cycle until the goal is achieved. This loop enables autonomous multi-step task execution.

Do LLMs make chatbots and agents the same thing?

No. LLMs power both, but they're used differently. In chatbots, LLMs generate text responses. In agents, LLMs serve as reasoning engines that plan actions, decide which tools to use, and iterate toward goals. The architecture around the LLM defines the system type.

Which is growing faster in 2026, chatbots or AI agents?

AI agents are growing at 45.8% CAGR compared to 23% for chatbots. Enterprise adoption of agents reached 85% by late 2025, driven by productivity gains in task automation. The market is shifting from conversational interfaces to autonomous workflow execution.